Associated with the REU Computing for Structure, is a lecture series — The Nature of Science. This explores the changing thought structures around science because of computation. This installment deals not so much of science, but of mathematics. In it we explore the new ideas of truth and knowledge because of advances in computer science.

One such (and very famous) is solutions to xd+yd=zd.

It turns out that in general, there can be no solution to Hilbert's 10th problem. The situation of interest is when there are no solutions, for no setting of the variables will the form become zero. Otherwise the infinite procedure of trying all values will halt, with the answer. The problem is that until a solution is found, this infinite process is inconclusive as to whether any solution will ever be found.

For x in [0,1,-1,2,-2, ...]

For y in [0,1,-1,2,-2, ...]

For z ....

...

if f(x,y,z,...)==0 then

write out x,y,z,...

halt

else pass

...

Next z

next y

next x

write "no solution" // this statement is useless

What is truth? The statement "this polynomial has a diophantine solution" is either a true or a false statement. But what does that mean in terms of proof? Can all true statements be proven?

Mathematics, at the time, was undergoing careful formalization. A complete description of mathematics was attempted by philosophers Bertrand Russell and Alfred North Whitehead, in the book Principia Mathematica, rolling out between 1910 and 1913, and a second edition around 1925 through 1927. It was then also that paradoxes in logic were found, such as Russell's paradox: In a town where the barber shaves everyone that does not save himself, who shaves the barber?"

In 1931 Kurt Gödel published his incompleteness paper that showed that this sort of paradox can be pushed through in the most formal and general of ways. That any system sufficiently sophisticated to reason about simple mathematics, can be twisted upon itself to create a statement which is false only if can be proven. And therefore if the system is to be consistent (only true things can be proven), then at least this statement is unprovable.

In fact it was proven that in any sufficient complex system, if the system is to be sound — that no false statement can be proven, then it must be incomplete — that some true statements cannot be proven.

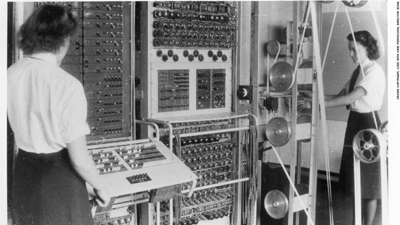

Alan Turing followed up this work but subjecting all of thought to a methodical understanding, introducing a machine, which we call now the Turing Machine, as a model for all rationality.

The machine was a computer. It calculated upon the end products of mathematics. But by this time it was understood that all reason was the end product of a calculation. Although it was the calculation of a boolean arithmetic, rather than an arithmetic upon the subject matter, be it numbers, geometric entities, or the programs themselves that calculated upon the subject matter.

TURING MACHINE

T = [Q,Σ,Γ:Q×Σ→(Q,Σ,{L,R}),F⊆Q]

A machine with a set of states Q, observing a tape of symbols.

The tape is Σ*, except it extends infinitely to the right by a special blank symbol.

The program is Γ, a transition function from states and the observed symbol on the tape,

to a new state, new symbol at that location in the tape, and a travel of one step left or right,

of the observation of the tape.

The deus ex machina sets the input on the tape initially, at a starting state, with the head leftmost.

The transition function Γ is then applied iteratively, until the machine enters one among

several halting states, F, if it ever does, which can be further labeled as accept or reject.

The result of the computation then is the contents of the tape at halting, the condition of the halting,

or that it never halts.

I find it interesting that at the time Turing was involved in breaking codes. To break a code, one only knows that some logical process produced the code. In order to break it, the space of all possible logical processes needs to be defined. Hence one can say, this code was produced by a Turing Machine. What sort of Turing Machine was it?

In Turing's reformulation, the machine was a prover, and its program an entity which implements proof. That is, a finite and stepwise logical process. The input was a statement, and the Turing machine confirmed the truth value of the statement, that is, whether it can finitely confirmed or denied by the logical process. However, it is impossible in general to know if such a program, on any particular input, will halt.

Hence the logic of system is incomplete. And truth is formally undecidable. The Turing Machine formulation makes this much easier to see. The finitary and discrete methods of proof can be twisted, in a way similar to as did Gödel, to create a self-contradictory set, that defined itself as the class of things that differ from anything that can be defined. This class is "true", in the sense that it represents a very clear set of elements. However, it cannot be recognized, because it was defined precisely as to elude all of the exhaustively listed programs. As formal reasoning is proposed to be a program, this set is beyond the reach of formal reasoning.

In practice, Turing's machines broke encryptions. And the concern was no so much decidability but efficiency. Finding ultimately the key was not the question, but rather whether the key could be found more expeditiously than exhaustive, unguided trial and error. In rough terms, exhaustive search is accomplished in the number of Turing machine steps exponential in the length of the key. A 40 bit key, a string of 40 zero's and one's, can take on one of 240 values, each to be tried to see if it works to decrypt the message.

However, for years codes have been "broken", and a more precise meaning of that is now clear. The key is deduced from samples of the encryption in much less than an exponential in the key size. Perhaps in only some polynomial equation, to some degree, in the key size. For instance, in a linear scan through the encrypted message, the number of appearances of each letter can be counted. For some codes, this count narrows down drastically the possible keys to be attempted.

A few more words on complexity. A full theory of complexity required a more robust measure than actual steps on a particular definition of a Turing Machine. In fact, things evolved until a time complexity could be a remarkably durable measure, not just to measure the performance of an algorithm (the run time for a solution to be produced), but to measure the internal complexity of the problem, thus making a hierarchy of decidable problems from least complex, requiring least run time, to more complex, requiring more run time.

A crucial step was to consider a problem to be an infinite list of instances of that problem, each with an integer size, which increases without bound. Then to consider the order of the function T(n) which is the run time to solve any instance of the problem with size n.

Given a function f:N→N, the order of the function is the set of functions:

O(f) = { g:N→N : ∃c>0, n0 ∀ n > n0, 0 ≤ g(n) ≤ cf(n) }

That is, O(f) is the class of all functions that are ultimately dominated some positive scaling of f.

Furthermore, one can consider the class of polynomially bound functions, by union of order classes for each degree, Poly(n) = ∪d O(nd). This can define a completely robust class of problems, as even very extensive changes to the machine model, while changing the run time, do not cause the run time to leave the class Poly(n).

Many problems of interest resisted the discovery a P-time algorithm that could calculate out the solution, however they all shared the property that if a possible solution was proposed, it could be verified correct by a P-time algorithm.

As a matter of formality, fix and alphabet Σ and consider the set Σ* of all finite length strings over Σ. A language L is any subset of Σ*. The problems we are considering are those of a language L that there exists a P-time verifier V for the language such that,

The class NP can be defined in several other ways.

The models and intuitions described above are all interchangeable. We will continue with the version that equips the TM with a read-only random tape.

The asymmetry is to be noted. One can demonstrate the argument for accepting an input. It will be the contents of the random tape that leads the computation to an accepting final state. The demonstration for rejecting an input is more suspect. As far as has been described, one would have to check that for all contents of the random tape, none lead to an accepting final state. The co-NP problems are those of this second sort.

Example: A graph G=[V,E] is Hamiltonian if in the graph there is a sequence of edges, leading in a cycle, which visits every vertex of the graph exactly once. The statement "G is Hamiltonian" is NP. The answer given, the verifier makes a simple check that the answer is in fact a correct answer. The statement for non-Hamiltonian is co-NP, as there might not be compact evidence for the non-existence of a Hamiltonian path.

The randomness tape has a further interpretation, that of a randomized computation.

Let the space of all possibilities for the random tape be Ω. This space is finite although its size is dependent on the input. The input of size n, will bound the computation length, and therefore it is unobservable to the TM what is written on the random tape beyond the cell numbered by the time bound. Then the machine, say a verifier V, become a sort of random variable, Vω(x), which given input x, and an experiment ω ∈ Ω, produces an output. The probability of that output is the measure of the set in Ω that would produce that output.

The class NP is believed to encompass a wider class of problems than does the class P (P-time). The class however isn't too large. It is exactly those problems that can be verified in P time, and hence that yield to exhaustive trials of the state space. It represents a natural model for proof, in which the genesis of a proof is inspired, that is to say, might not be constructible in P-time, but once the proof is written down, its verification is mundane.

NP represents the added power of having a helper, the prover, provide a single string that is the missing piece of the puzzle for solving for membership. It is the power of interaction, where one party in the interaction is P-time bound, and the other has computational powers that we do not restrict.

To further explore the power of interaction, two Turing machines are proposed, a prover P and a verifier V, that form a system of computation, denoted (P,V), which share at least four tapes,

Typically, we shall a private random tape for V, and demand that V be P-time bounded. P however can be unbounded, and can have a private random tape if it wishes. However, since P is unbounded it can simulate a random tape by exhaustive enumeration.

Let L be a language. We then define two properties of the IP system's computation.

In the formalism of IP, languages that are NP have perfect completeness and perfect soundness. The Verifier verifies. It cannot be convinced otherwise than the truth, and it is always convinced of the truth. In this situation, the probabilities referenced above are both 1. Therefore the random-tape is unnecessary for the verifier — any setting of that tape gives a correct answer, so set it to, e.g. all zeros, in fact, do away with it altogether.

In addition, once randomness is removed from V, only a single message from P to V is needed, as P can calculate for itself P's side of the computation, and supply in a single, unprompted message, all the appropriate responses.

We have arrived at the edge of the known. We take on step into the unknown.

What if we allowed the verifier to have imperfect soundness? It is important that we relax soundness, as relaxing completeness actually makes no difference. However, by relaxing soundness, we are allowing the verifier to be "fooled" by the prover with some likelihood. Say 1/3 of the time, depending on the random tape of the verifier. Then it turns out that (P,V)(x) can accept a vastly increased class of languages. In fact, the system is as powerful as P-space computation. (We won't define this.)

Example: Accepting non-isometric graphs. Given two graphs, G1 and G2, they are isomorphic if there is an vertex correspondence that preserves edges. Finding an isomorphism is hard. Not harder than trying all vertex correspondences and checking, in P-time, if the correspondence is an isomorphism. In prover-verifier style, the prover sends the verifier the alleged isomorphism and the verifier verifies it. It is a single hint message, the the problem of isomorphism is in NP.

The co-NP problem of non-isomorphism can be solved in IP as follows. The verifier picks one of G1 or G2, and creates an isomorphic disguise and sends this to P. P returns 1 or 2, according to which of the two graphs were chosen by the verifier.

Completeness: if the graphs are not isomorphic, P can decide which of the two graphs was the source of the verifier's isomorphism, answer correctly, and have V accept.

Soundness: if the graphs are isomorphic, P receives as graph isomorphic to both and cannot guess which of the two was V source. It can answer at random and verifier will be fooled with the probability that the random guess is correct, i.e. probability 1/2.

Note that: Although soundness is not complete, the probability that the verifier is fooled can be made exponentially close to 0. Since completeness is perfect, the verifier can repeat for a polynomial number of times the protocol, and reject if any run rejects. Returning to the algorithm for non-isomorphic graphs, the probability for soundness is now (1/2)p(n), where p(n) is some (possibly high degree) polynomial.

Often, this means the prover sending the solution to the problem. If verification is P-time, this both convinces the verifier that x is in the language, but provides in addition, the solution to a problem.

Example: For graph isomorphism, the prover sends the isomorphism, and the verifier verifies. This both,

A zero-knowledge proof has the advantage that the protocol is not transferable. If the verifier learns of a truth in zero-knowledge, it cannot serve as a prover for other verifiers, to transmit that knowledge. If, however, the verifier was given the isomorphism between the two graphs, if could present that to any inquirer and settle the matter. However, in zero-knowledge, since the verifier learns nothing besides the truth of the proposition, it cannot convince any other computational machine of the truth. It is a private decision made by the verifier.

These are useful in cryptography, e.g. in proof of identity protocols. A password scheme is not zero-knowledge, as the verifier verifies identity, but also learns the password, and can impersonate the prover but repeating the password. In a zero-knowledge proof of identity, the verifier is convinced the prover knows the password, but learns nothing about that password, other than that the verifier knows it.

To move forward, a definition and a technique are required,

In our context, knowledge is what the verifier learns from the prover. Therefore what the verifier "knows" without interaction from the prover, is not knowledge.

Our full definition of IP show allow that the verifier have two sided error; we will go back to considering imperfect completeness as well as imperfect soundness. This gives us the class of problems (algorithms) called BPP, Bounded Probabilistic Polynomial time.

BPP: BOUNDED PROBABILISTIC POLYNOMIAL TIME Given a language L, L ⊆ Σ*, a probabilisitic Turing Machine B accepts L in BPP has the properties,

By a process of voting, the gap between the probability of correctly identifying the x, and being fooled, can be made exponentially close to their ideal: 1 for x ∈ L, and 0 for x ∉ L.

In the definition of IP, we chose to present a version that immediately required completeness to be perfect. We reiterate that any language with an IP proof with imperfect completeness also as an IP proof with perfect completness.

This work brings us to the definition: knowledge is that which cannot be computed in BPP from known facts. It was what was transmitted by the prover to the verifier to allow it to make a decision, mostly correctly, that it could not have made without the interaction.

To prove an IP proof is also zero-knowledge, then, is provided by the notion of a simulator, a BPP machine, without any special knowledge, that can substitute for the prover in the IP protocol. Considering the interaction between P and V, the contents of the communication tapes, to be a random variable, the simulator S will sample from that random variable with the exact distribution as the authentic computation with P, on the assumption that x ∈ L. The verifier then could itself have provided the interaction, so under the assumption x ∈ L, the verifier learns nothing.

When x ∉ L, the simulator produces an improperly biased sampling, that which would probably cheat the verifier, hence the verifier does learn the truth of the proposition when interacting with P, rather than S, but nothing more.

Example: A zero-knowledge proof of graph isomorphism. P sends to V an isomorphic copy of G1, which is also isomorphic to G2, since the two graphs are isomorphic. V picks at random i ∈ {1 ,2 } and challenges P to produce an isomorphism between the sent graph and Gi.

In the case that the graphs are isomorphic, P completes the protocol. Else, with probability 1/2 P cannot complete the protocol.

A simulator S can replace P by choosing a random isomorphism from Gi, where i is either guessed, or the simulator includes a copy of V inside its code, complete with V's random tape, to know, rather than guess, the response i of V. In the formalism, the S can be defined as producing an "I don't know output", ⊥, and the simulation is considered successful if the distribution of outputs, conditioned on the simulator not outputting ⊥, is identical to the distribution of outputs of P.

Example: Returning to the protocol for non-isomorphic graphs, we not this protocol is not zero-knowledge. The brings up an element captured by the notion of honest verifier zero-knowledge. If the verifier follows the protocol given in the non-isomorphism algorithm, it might be that the protocol is zero-knowledge. However a cheating verifier can attempt to extract knowledge from the prover but deviating from the protocol. Zero-knowledge requires at no cheating prover learns more than the validity of the statement.

If can happen, in the algorithm under consideration, that V becomes aware of three graphs, two which are known non-isomorphic and a third known to be isomorphic to one of the other two. Using the provided protocol, the verifier can learn which of the two the third graph is isomorphic, by presenting the third graph as the challenge graph. This is not something that is BPP computable, has the cheating verifier has cheated the prover out of this knowledge.

The class BPP has been introduced, and we note that BPP, rather than P, is now considered the more natural candidate for efficiently computable functions.

author: burton rosenberg

created: 11 jul 2018

update: 12 jul 2018